Vector Embeddings: The Next Frontier in SEO?

No, it's not. But let's explore Vector Embeddings together and examine their practical applications in Digital Marketing Growth and SEO.

In recent weeks, Vector Embeddings have become a hot topic in the SEO world. As a digital marketing expert, I've been closely following this trend, reading new posts and digging into the research. Today, let's explore the concept and evaluate what it can realistically offer for SEO. But first things first.

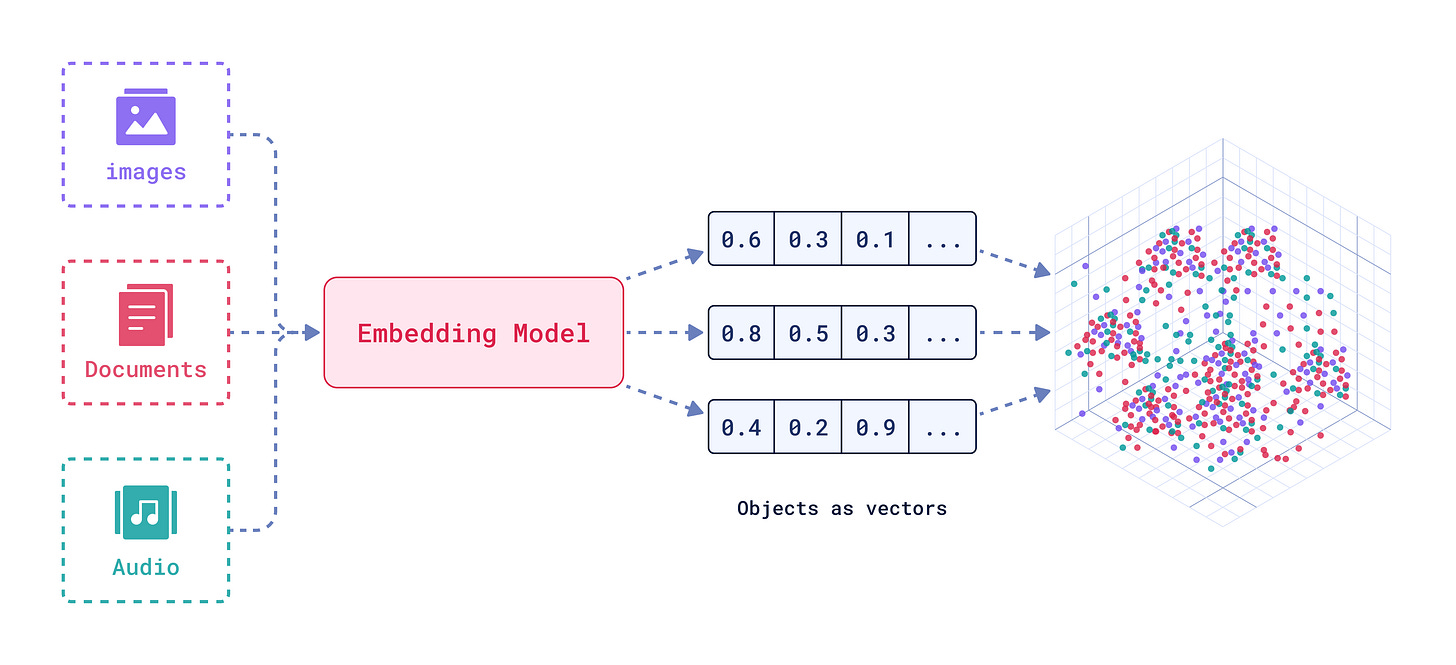

What are Vector Embeddings?

Vector embeddings are numerical representations of data in a high-dimensional space. They capture semantic meaning and relationships between entities in a machine-processable format. Here's what you need to know:

Representation: Words, sentences, or documents are mapped to dense vectors of real numbers.

Dimensionality: Typically 100-300 dimensions for classic word embeddings such as word2vec or GloVe; contextual models are larger (BERT-base uses 768).

Example: The word "king" might be represented as [0.50, -0.23, 0.65, ..., 0.1].

Semantic relationships: Similar concepts are positioned closer together in the embedding space.

Applications: Used in various NLP tasks like machine translation, sentiment analysis, and text classification.

Semantic Relationships in Action

Vector embeddings can capture complex semantic relationships. For instance:

vector("king") - vector("man") + vector("woman") ≈ vector("queen")

This operation demonstrates how embeddings can encode gender relationships within royalty concepts.
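
To make the arithmetic concrete, here is a minimal sketch using hand-made 2-D vectors. The numbers and the axis meanings ("royalty", "gender") are invented for illustration; real embeddings have hundreds of learned dimensions without such clean labels, and the analogy only holds approximately.

```python
# Toy vectors invented for this example: axis 0 ~ "royalty", axis 1 ~ "gender".
toy_vectors = {
    "king":  [1.0,  1.0],
    "queen": [1.0, -1.0],
    "man":   [0.0,  1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}

def analogy(a, b, c, vectors):
    """Return the word whose vector is nearest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]

    def squared_distance(word):
        return sum((t - v) ** 2 for t, v in zip(target, vectors[word]))

    # Skip the three input words so the result is a genuinely new word.
    candidates = [word for word in vectors if word not in (a, b, c)]
    return min(candidates, key=squared_distance)

result = analogy("king", "man", "woman", toy_vectors)
print(result)  # queen
```

With real embeddings the result vector rarely lands exactly on "queen"; it is simply the closest word among the candidates.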

Similarity Measures

Cosine similarity is often used to measure how close two vectors are in the embedding space. For example:

cos_sim(vector("cat"), vector("dog")) > cos_sim(vector("cat"), vector("airplane"))

This reflects that "cat" and "dog" are semantically closer than "cat" and "airplane".
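
Cosine similarity is the dot product of two vectors divided by the product of their lengths, so it measures the angle between them rather than their magnitude. The sketch below implements it in plain Python; the three vectors are made-up stand-ins, not output from a real model.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention used here for zero vectors
    return dot / (norm_u * norm_v)

# Hypothetical 3-D vectors chosen so that "cat" and "dog" point the same way.
cat = [0.9, 0.8, 0.1]
dog = [0.8, 0.9, 0.2]
airplane = [0.1, 0.2, 0.9]

print(cosine_similarity(cat, dog) > cosine_similarity(cat, airplane))  # True
```

A score near 1 means the vectors point in nearly the same direction, a score near 0 means they are unrelated.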

The Connection Between Vector Embeddings and SEO

Google has been at the forefront of implementing vector embeddings in search algorithms.

Here's why it matters:

BERT and Transformers: BERT, Google's Transformer-based NLP model, produces contextual embeddings, so the same word gets a different vector depending on the words around it.

Semantic understanding: Helps Google grasp the meaning behind queries and content.

Improved search results: Leads to more relevant and accurate search outcomes.

BERT: Google's NLP Revolution

BERT (Bidirectional Encoder Representations from Transformers) marked a significant leap in NLP.

Revolutionary Aspects of BERT

Bidirectional Context: Unlike earlier left-to-right language models, BERT considers context from both directions simultaneously.

Transfer Learning: Pre-train on general language understanding, then fine-tune for specific tasks with minimal additional data.

Masked Language Model: Innovative training approach of masking random words allows learning deep bidirectional representations.

Multi-task Applicability: The same pre-trained model can be fine-tuned for various NLP tasks with minimal architectural modifications.

Impact on SEO

BERT's introduction significantly enhanced Google's ability to understand search queries and content semantics.

This means:

Better interpretation of long-tail keywords

Improved understanding of context and user intent

More accurate matching of queries to relevant content

Can We Effectively Use Vector Embeddings in SEO?

The answer is nuanced. Let's break it down:

Yes, you can:

Speak the language of machines for better optimization.

Create semantic network maps for your website.

Use AI-powered automation for advanced content optimization.

Perform in-depth webpage analysis for challenging keywords.

And no, sometimes you can't or shouldn't.

When vector embeddings might not be necessary:

If you're not doing advanced SEO:

If your website still has many basic optimization areas to improve, focus on those first.

Prioritize optimizing your web pages for visitors, ensuring a good user experience and relevant content.

When traditional SEO techniques are sufficient:

In recent years, semantic similarity has become increasingly important and well-understood.

Modern search engines, thanks to technologies like BERT and Transformers, are quite adept at recognizing semantic relationships.

By focusing on creating:

Contextually relevant internal links

Semantically appropriate backlinks

High-quality, topically coherent content

You can naturally achieve many of the benefits that vector embeddings aim to provide.

For smaller websites or limited resources:

Implementing vector embedding strategies can be resource-intensive.

For smaller sites or those with limited technical resources, traditional SEO methods can still be highly effective.

When your content is already well-optimized:

If your content or website is already semantically rich and well-structured, the additional benefit from vector embeddings might be marginal.

Practical Applications of Vector Embeddings in SEO

#1 Content Analysis with Vector Embeddings (Approach with Caution)

First of all, here is the script: https://github.com/itsbariscan/SEO-Semantix

While this approach requires careful consideration, it can offer valuable insights when used appropriately. Here's a detailed look at the process and its potential benefits:

The Process:

Content and Keyword Preparation:

Extract the main content from your webpage or article.

Identify the primary keyword or topic you're targeting.

Semantic Field Generation:

Use GPT or a similar AI model to generate two sets of semantic fields:

a) An SEO-focused semantic field (e.g., related keywords, industry terms)

b) A general semantic field (broader, more conceptual terms)

Generate about 50 terms for each field to ensure comprehensive coverage.

Vector Embedding Creation:

Use a library like Sentence Transformers to create embeddings for:

a) The main content

b) The primary keyword

c) Each term in both semantic fields

Similarity Calculations:

Calculate cosine similarity between the content vector and the keyword vector.

Compute similarities between the content vector and each term in the semantic fields.

Data Visualization:

Create interactive visualizations (e.g., using Plotly) to display:

a) Similarity scores for SEO semantic field terms

b) Similarity scores for general semantic field terms

Use color coding to highlight high and low similarity scores.
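
The embedding and similarity steps above can be sketched as follows. This is not the linked script: the helper names, sample text, and terms are hypothetical, and `build_toy_embedder` is a word-count stand-in used only so the example runs without downloading a model. It captures word overlap, not meaning, so in practice you would pass in an `embed` function that wraps a real model such as one from Sentence Transformers.

```python
import math
import re

def tokenize(text):
    """Lowercase a string and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

def build_toy_embedder(texts):
    """Return embed(text): a word-count vector over a shared vocabulary.

    Stand-in for a real embedding model; it only sees exact word overlap.
    """
    vocabulary = sorted({token for text in texts for token in tokenize(text)})
    position = {token: i for i, token in enumerate(vocabulary)}

    def embed(text):
        vector = [0.0] * len(vocabulary)
        for token in tokenize(text):
            if token in position:
                vector[position[token]] += 1.0
        return vector

    return embed

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def score_against_content(content, candidates, embed):
    """Return (text, similarity) pairs, most similar to the content first."""
    content_vector = embed(content)
    scored = [(text, cosine_similarity(content_vector, embed(text)))
              for text in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical sample data.
content = ("Urban gardening tips: vertical gardening, composting at home, "
           "and water conservation for small balconies.")
keyword = "sustainable urban gardening"
seo_terms = ["vertical gardening", "composting", "water conservation",
             "environmental impact", "community building"]

embed = build_toy_embedder([content, keyword] + seo_terms)
keyword_score = cosine_similarity(embed(content), embed(keyword))
ranked_terms = score_against_content(content, seo_terms, embed)

print(f"keyword: {keyword_score:.2f}")
for term, score in ranked_terms:
    print(f"{term}: {score:.2f}")
```

Because the toy embedder only counts shared words, terms with no overlap score exactly zero. A real model would give them low but non-zero scores, which is what makes the comparison useful.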

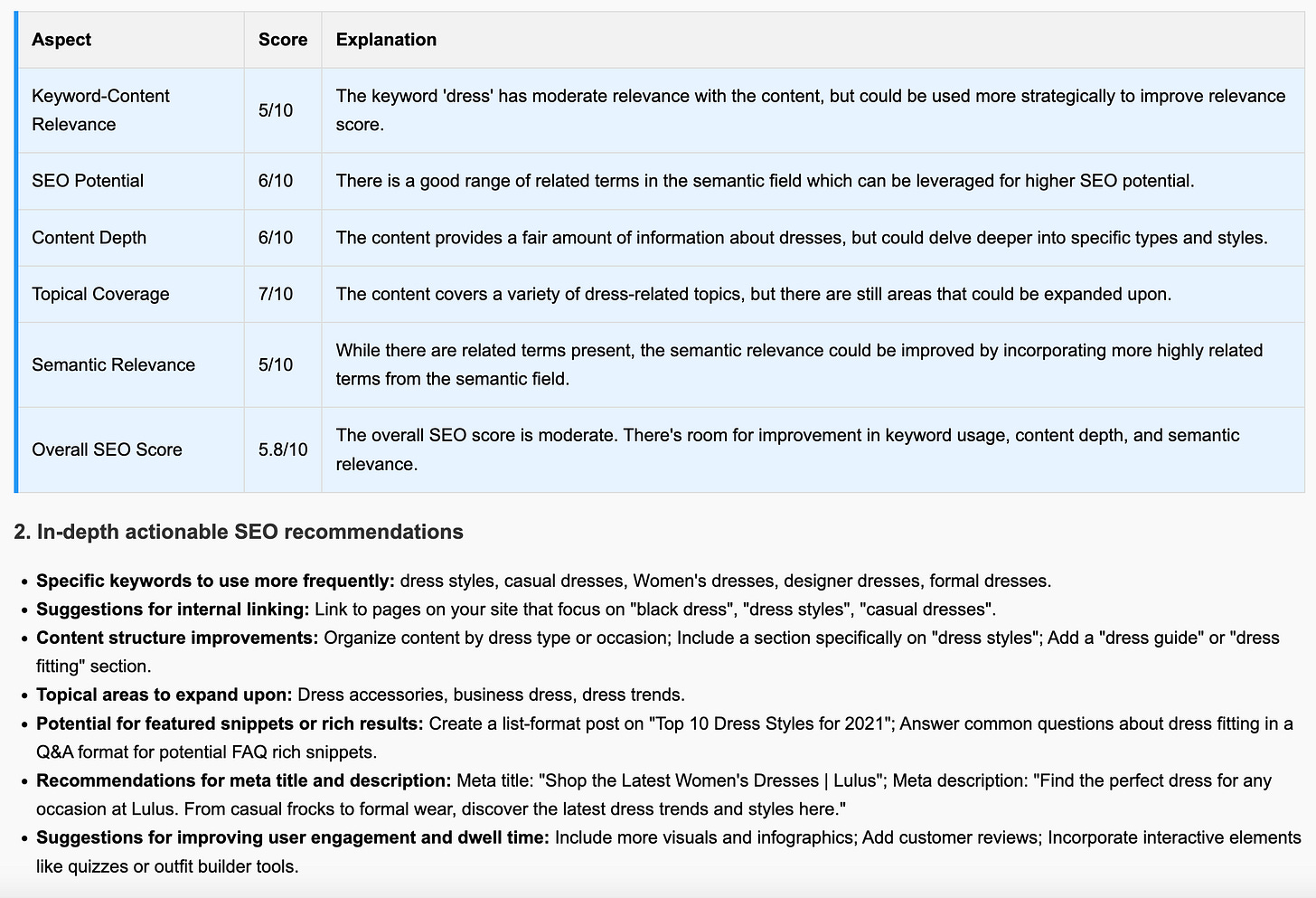

AI-Powered Analysis:

Feed the similarity scores and semantic fields into GPT-4 for a comprehensive SEO analysis.

Request insights on:

a) Content-keyword relevance

b) SEO potential

c) Content depth and breadth

d) Suggestions for improvement

Interactive Dashboard Creation:

Develop an HTML dashboard that includes:

a) Visualizations of semantic field similarities

b) The AI-generated analysis

c) Top 20 most similar terms from each semantic field
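
As a rough idea of the dashboard's "top terms" section, the sketch below picks the highest-scoring terms from one semantic field and renders them as an HTML table. The function names and the scores are hypothetical placeholders, and a real dashboard would also embed the charts and the AI-generated analysis.

```python
from html import escape

def top_terms(scores, n=20):
    """Return the n (term, score) pairs with the highest similarity."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:n]

def render_table(title, scores, n=20):
    """Render the top-n terms of one semantic field as an HTML fragment."""
    rows = "\n".join(
        f"<tr><td>{escape(term)}</td><td>{score:.2f}</td></tr>"
        for term, score in top_terms(scores, n)
    )
    return (
        f"<h2>{escape(title)}</h2>\n"
        "<table>\n<tr><th>Term</th><th>Similarity</th></tr>\n"
        f"{rows}\n</table>"
    )

# Made-up similarity scores for illustration only.
seo_scores = {
    "vertical gardening": 0.71,
    "composting": 0.64,
    "raised beds <diy>": 0.33,
}

html_fragment = render_table("SEO semantic field", seo_scores, n=2)
print(html_fragment)
```

Escaping the terms matters here because they come from a language model or a crawl, so they may contain characters that would otherwise break the markup.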

Example Use Case:

Let's say you're analyzing a blog post about "Sustainable Urban Gardening". Your analysis might reveal:

High similarity with terms like "vertical gardening", "composting", and "water conservation".

Lower similarity with broader terms like "environmental impact" or "community building".

The AI analysis might suggest:

Adding more content about the environmental benefits of urban gardening

Including practical tips for small-space gardening techniques

Linking to resources on local gardening communities or workshops

Potential Benefits:

Deep Content Understanding: Gain insights into how well your content aligns with your target topic and related concepts.

Gap Identification: Discover areas where your content might be lacking in depth or relevance.

SEO Optimization Guidance: Get specific suggestions for improving your content's SEO potential.

Competitive Analysis: Compare your content's semantic coverage with top-ranking competitors.

Limitations and Cautions:

Complexity: This approach can be technically challenging and time-consuming.

Over-optimization Risk: Blindly following similarity scores could lead to unnatural content.

Context Sensitivity: Vector embeddings might miss nuanced contextual meanings.

#2 Internal Link Opportunities with Vector Embeddings (Promising Approach)

Here is the GitHub repo for ContextBridge, a semantic internal link tool: https://github.com/itsbariscan/ContextBridge-Semantic-Internal-Link-Tool

This application of vector embeddings shows significant potential for improving website structure and user experience. Let's explore this in more detail:

The Enhanced Process:

Data Collection and Preprocessing:

Crawl your website to collect URLs, H1 tags, and main content.

Clean and preprocess the text data.

N-gram Implementation:

Convert text to N-grams (e.g., 3-grams) to capture more context.

For short texts (1-2 words), keep them intact.

AI-Powered Category Determination:

Use GPT or a similar model to categorize each page based on its URL and H1 tag.

Generate relevant keywords for each page.

Vector Embedding Creation:

Create embeddings for each page using its N-gram representation.

Similarity Calculation and Filtering:

Compute cosine similarities between all page pairs.

Apply category-based filtering to ensure relevance.

Internal Link Suggestion Generation:

For each page, suggest the top 5-10 most similar pages as potential internal links.

Prioritize links within the same or closely related categories.

Automated Implementation (Optional):

Develop a system to automatically insert internal links based on the suggestions.

Include safeguards to prevent over-linking or irrelevant links.
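
The N-gram step in the process above can be sketched in a few lines. The function name and the whitespace-based word splitting are assumptions for illustration, not taken from the ContextBridge repo.

```python
def to_ngrams(text, n=3):
    """Split text into overlapping word n-grams.

    Texts shorter than n words are kept intact as a single item,
    matching the rule for 1-2 word texts when n is 3.
    """
    words = text.split()
    if not words:
        return []
    if len(words) < n:
        return [" ".join(words)]
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]

print(to_ngrams("lightweight backpacking tent for hikers"))
# ['lightweight backpacking tent', 'backpacking tent for', 'tent for hikers']
print(to_ngrams("camping tents"))
# ['camping tents']
```

Each n-gram keeps a little of the surrounding context, which single words would lose.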

Example Scenario:

Imagine you're running a large e-commerce site selling outdoor gear:

The system analyzes a product page for a "Lightweight Backpacking Tent".

It categorizes the page under "Camping Equipment > Tents".

The AI generates keywords like "ultralight camping", "3-season tent", "backpacking gear".

Vector similarities are calculated with other pages.

The system suggests internal links to:

A guide on "Choosing the Right Tent for Backpacking"

A product page for "Lightweight Sleeping Bags"

A blog post about "Essential Ultralight Backpacking Gear"

A category page for "Backpacking Stoves"
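
A scenario like this one can be sketched as below. The URLs, categories, and 3-D vectors are all made up, and the category filter is a simplifying assumption: it only keeps pages that share the same top-level category, which is stricter than "closely related". The similarity threshold is likewise an arbitrary illustrative value.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def top_level(category):
    """'Camping Equipment > Tents' -> 'Camping Equipment'."""
    return category.split(" > ")[0]

def suggest_links(pages, top_k=5, min_similarity=0.5):
    """Map each URL to its most similar same-category pages, best first."""
    suggestions = {}
    for url, page in pages.items():
        scored = []
        for other_url, other in pages.items():
            if other_url == url:
                continue  # never link a page to itself
            if top_level(other["category"]) != top_level(page["category"]):
                continue  # category-based filtering
            similarity = cosine_similarity(page["vector"], other["vector"])
            if similarity >= min_similarity:
                scored.append((other_url, similarity))
        scored.sort(key=lambda pair: pair[1], reverse=True)
        suggestions[url] = [other_url for other_url, _ in scored[:top_k]]
    return suggestions

# Hypothetical pages with hand-made vectors standing in for real embeddings.
pages = {
    "/tents/lightweight-backpacking-tent": {
        "category": "Camping Equipment > Tents", "vector": [0.9, 0.8, 0.1]},
    "/guides/choosing-a-backpacking-tent": {
        "category": "Camping Equipment > Guides", "vector": [0.85, 0.75, 0.2]},
    "/sleeping-bags/lightweight": {
        "category": "Camping Equipment > Sleeping Bags", "vector": [0.6, 0.9, 0.1]},
    "/camping/folding-chair": {
        "category": "Camping Equipment > Furniture", "vector": [0.1, 0.1, 0.9]},
    "/kayaks/touring-kayak": {
        "category": "Water Sports > Kayaks", "vector": [0.1, 0.2, 0.9]},
}

suggestions = suggest_links(pages)
print(suggestions["/tents/lightweight-backpacking-tent"])
```

In this toy data the folding chair shares the tent's category but falls below the similarity threshold, while the kayak is dropped by the category filter, so neither is suggested for the tent page.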

Benefits of This Approach:

Improved Site Structure: Creates a more logically interconnected website.

Enhanced User Experience: Helps users discover related content more easily.

SEO Boost: Can improve internal PageRank distribution and topical relevance signals.

Scalability: Particularly valuable for large websites with thousands of pages.

Dynamic Updating: Can adapt to new content without manual intervention.

Implementation Tips:

Start Small: Begin with a subset of your site to test and refine the system.

Manual Review: Initially, have editors review and approve suggested links.

Monitor Performance: Track metrics like click-through rates on new internal links.

Iterative Improvement: Regularly update your embeddings and algorithms based on performance data.

Potential Challenges:

Technical Complexity: Requires significant development and computational resources.

Over-optimization Risk: Be cautious not to create too many internal links.

Content Quality Dependency: The system's effectiveness relies on having high-quality, diverse content.

Key Takeaways

Free alternatives exist: Sentence Transformers is an open-source library that can generate embeddings locally, without paying for an embedding API.

Don't overcomplicate: Vector embeddings shine for poorly optimized content but may not add much value to well-crafted pieces.

Prioritize automation: Large-scale websites require more sophisticated modeling and automation.

Vector embeddings aren't magic: They're a tool to help you understand how machines represent language, not a cure-all solution.

Combine with other AI tools: Use a large language model such as OpenAI's GPT models or Claude to help interpret vector embedding data.

Conclusion: The Future of Vector Embeddings in SEO

As we've seen, vector embeddings offer powerful capabilities for understanding and optimizing content. However, their application in SEO is still evolving. Here's my take on their future:

Integration with Traditional SEO: Vector embeddings will likely complement, not replace, traditional SEO techniques.

Personalization: As embeddings become more sophisticated, they could enable highly personalized search experiences.

Content Strategy Revolution: Embeddings may fundamentally change how we approach content creation and optimization.

Challenges: Balancing the technical complexity with practical SEO application will be crucial.

Remember, while vector embeddings are exciting, they're just one part of a comprehensive SEO strategy. The key is to stay informed, experiment judiciously, and always prioritize delivering value to your audience.

The necessity of vector embeddings depends entirely on your specific use case. Consider them as another tool in your SEO arsenal, not the be-all and end-all solution. As with any new technology, it's essential to evaluate its practical value for your specific needs.

In the ever-evolving world of SEO, it's not about chasing every new trend, but about finding the right mix of strategies that drive real results for your business.

Whether you decide to dive deep into vector embeddings or stick with traditional methods, always keep your focus on creating valuable, relevant content for your users. After all, that's what search engines are ultimately trying to reward.

Applied carefully and in the right situations, these techniques can help you improve your website's content relevance, structure, and overall SEO performance.

The future of SEO lies in striking the right balance between cutting-edge technology and time-tested strategies.

Good luck,

Baris.